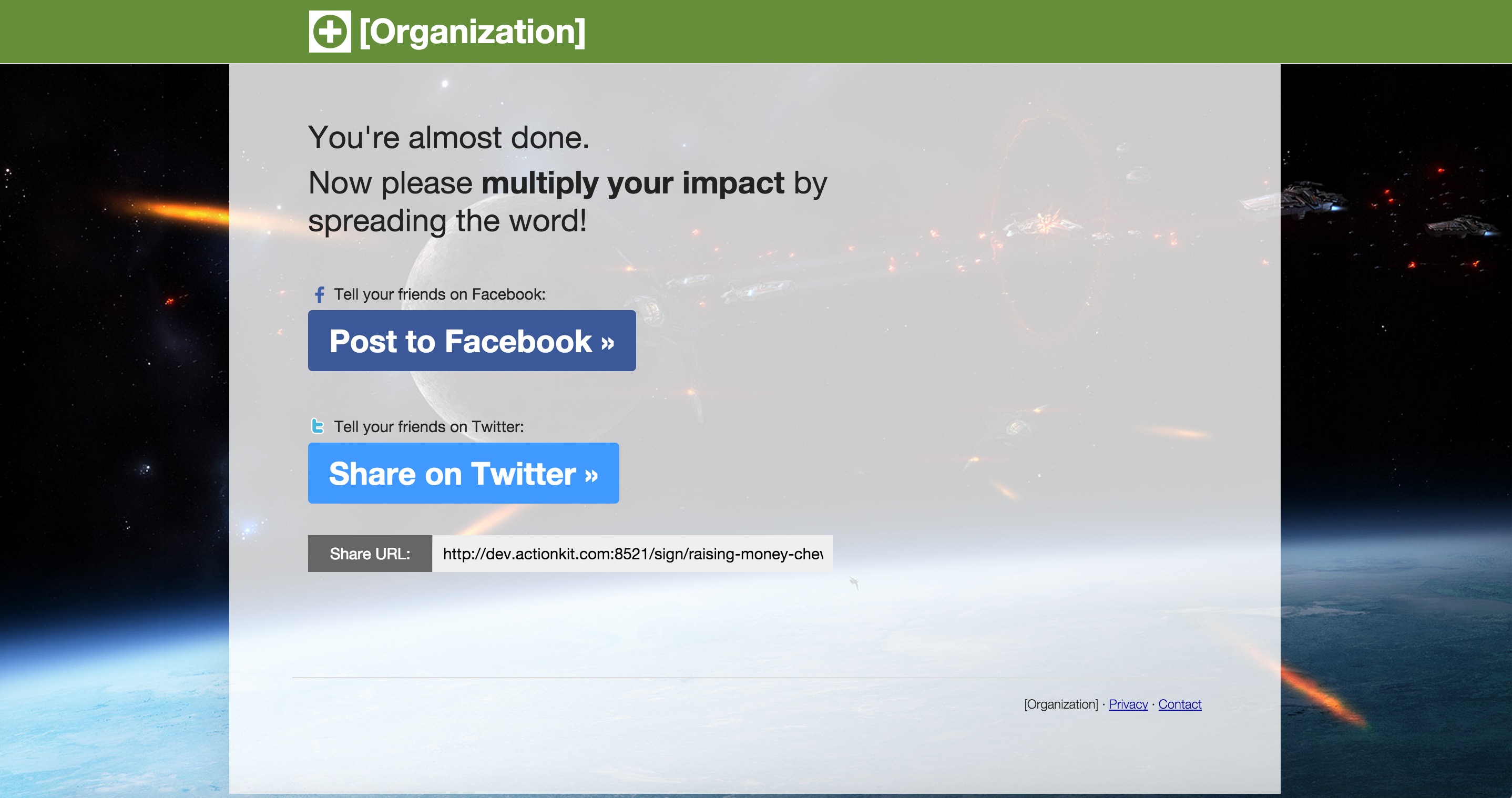

In our last tutorial, we showed you how ActionKit’s A/B testing tools can be used to optimize your action rates. You can use the same tools to test your share page content and layout. The share page is the page visitors are redirected to after they submit an action, and it typically includes a call to action asking them to share the page with their networks.

Let’s look at an example:

In our continuing campaign for justice for Chewbacca, we’re petitioning the Rebel Alliance to get him his medal. To maximize public outcry we want to:

- Test our share page template layout. Would more people share if the page had a spaceship theme with extra-large buttons? Or would they share more if the page looked like the default?

- Test our sharing language for Facebook and Twitter to see which images and share text pull in more new signers.

It’s easy to test more than one thing on a page in ActionKit.

Setting up the first experiment: testing design

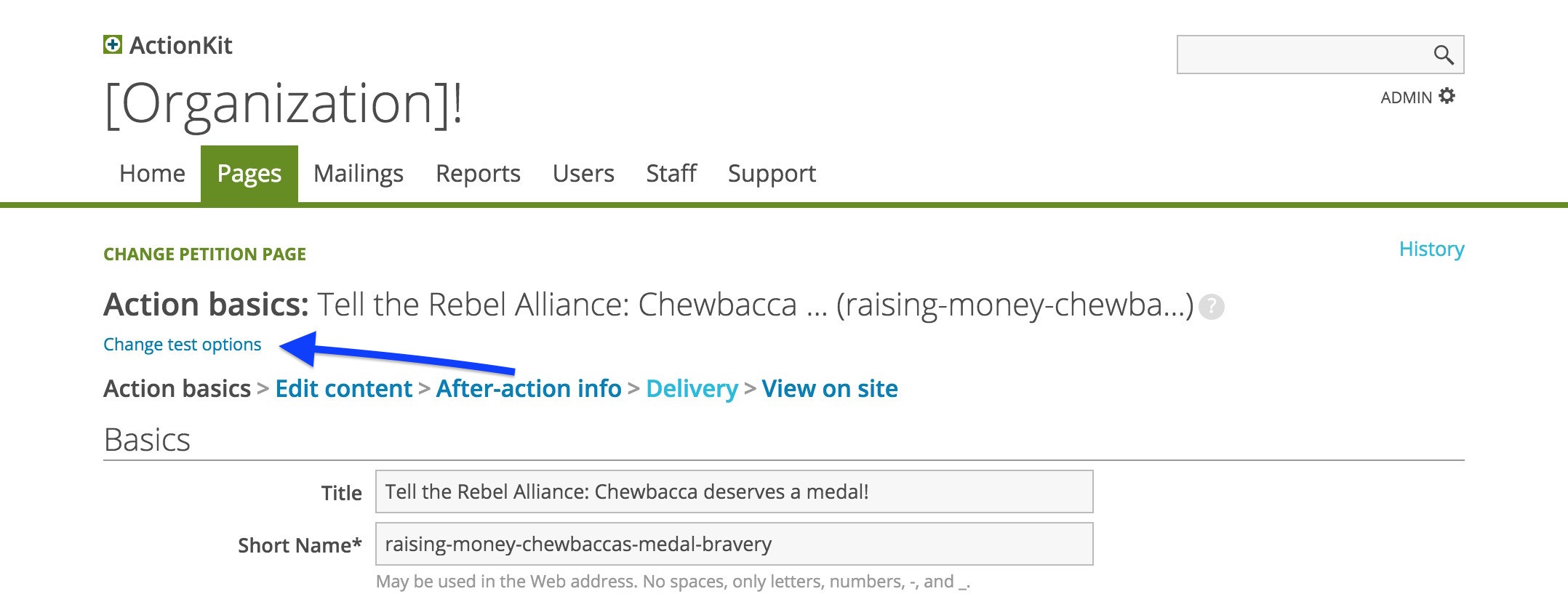

Start by adding the first test:

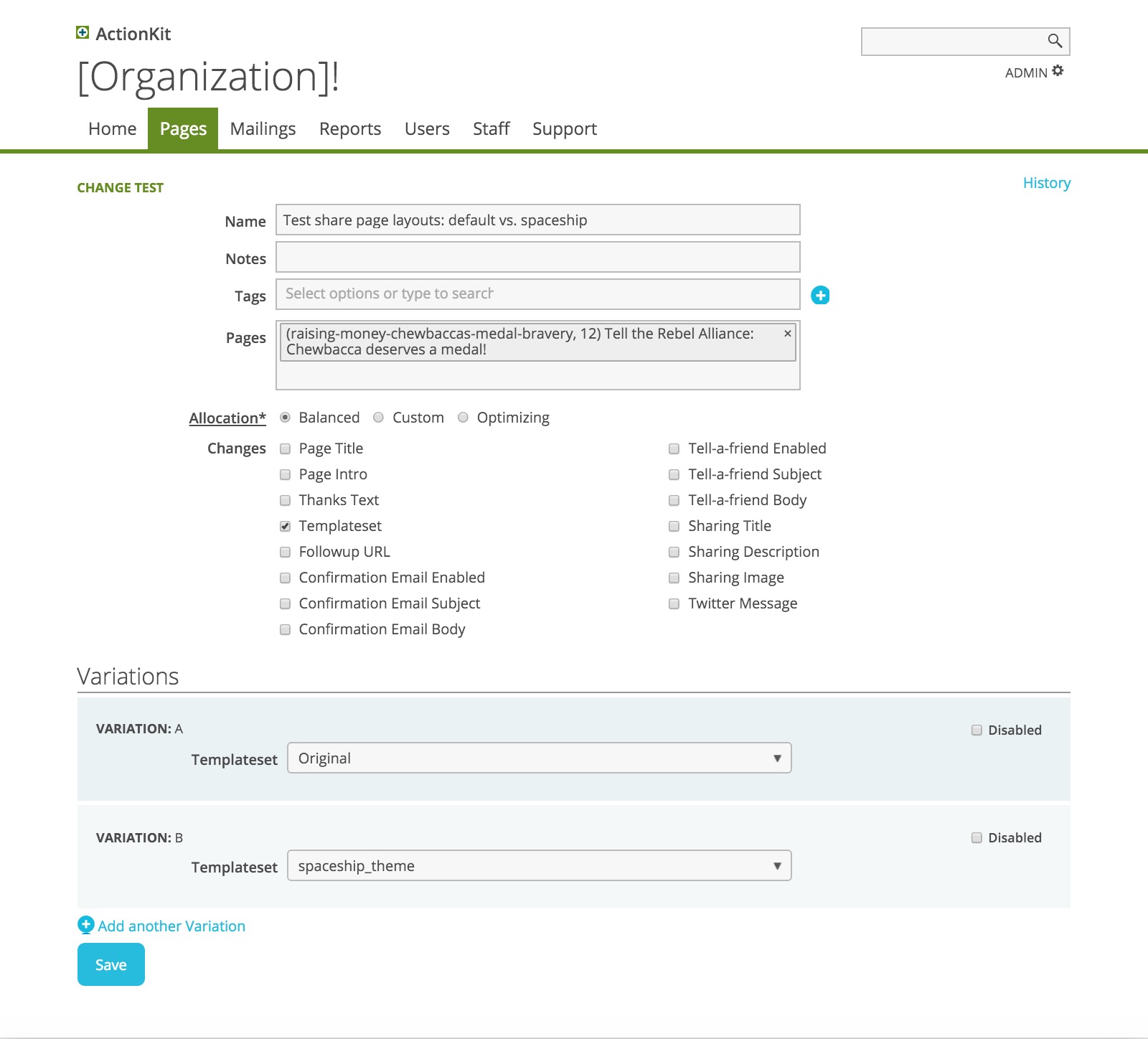

The layout with the spaceship theme and extra-large buttons is reflected in one of the templatesets, so that’s what you’ll select for testing:

Last time we used the default allocation of balanced. Now let’s look at what the other allocations do.

A custom allocation lets you edit the numeric weights on each variation and divide up users according to their relative weights.

There are a couple of reasons why you might decide to use a custom allocation. First, there might be a high-risk, radical change to the page that you’re unsure of, but which you’d still like to test. You can minimize the risk by allocating only 20% of your visitors to this new variation.

Alternatively, there may be a new variation, such as a call-to-action popup, that won a recent A/B test. You’d still like to validate it over the long term, since user behavior changes and you want to make sure the popup doesn’t eventually have a negative effect. You can allocate 95% of visitors to the popup and leave 5% for the old variation without it.

Note: If you’ve set a custom allocation at the beginning of an experiment, make sure you don’t change the allocation percentages in the middle of an experiment! Doing so will invalidate your results.
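To make the idea of relative weights concrete, here is a minimal Python sketch. It is not ActionKit code (you set weights in the admin interface), and the function names and variation names are hypothetical. It only illustrates how numeric weights translate into the share of visitors each variation receives.

```python
import random


def traffic_shares(weights):
    """Convert relative weights into the fraction of visitors per variation."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


def pick_variation(weights, rng):
    """Pick one variation at random, in proportion to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical weights: send only 20% of visitors to the risky new layout.
weights = {"default": 80, "spaceship": 20}
print(traffic_shares(weights))

# Assign one simulated visitor; a seeded generator keeps the run repeatable.
print(pick_variation(weights, random.Random(42)))
```

Because only the ratio matters, weights of 4 and 1 would split traffic the same way as 80 and 20.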

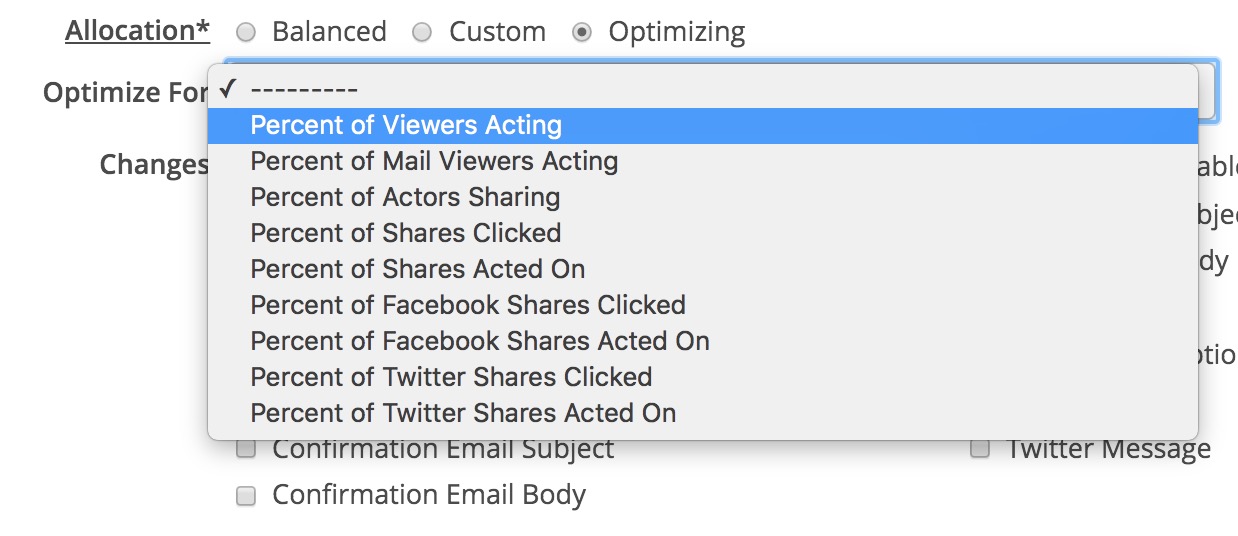

Finally, the optimizing allocation option lets you optimize for a particular conversion goal such as percent of shares acted on or percent of viewers acting. ActionKit will automatically adjust the numeric weights and send users to the best-performing variation based on the goal you’ve set.
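The source doesn’t describe how ActionKit computes its optimizing weights, so the following Python sketch is only an illustration of the general idea, under our own assumptions: each variation gets a small guaranteed share of traffic (a hypothetical `floor` parameter), and the rest is divided in proportion to each variation’s observed rate for the chosen goal.

```python
def reweight(stats, floor=0.05):
    """Return a traffic share per variation, favoring better performers.

    stats maps a variation name to (goal_count, base_count), for example
    (actions from shares, shares). This is an illustrative heuristic,
    not ActionKit's actual algorithm.
    """
    n = len(stats)
    if n == 0 or floor * n > 1:
        raise ValueError("need at least one variation and floor * n <= 1")

    # Observed rate for each variation; no data yet counts as a rate of 0.
    rates = {
        name: (goal / base if base else 0.0)
        for name, (goal, base) in stats.items()
    }
    total = sum(rates.values())
    if total == 0:
        # Nothing to compare yet, so split traffic evenly.
        return {name: 1 / n for name in stats}

    # Every variation keeps `floor`; the remainder follows the rates.
    remaining = 1 - floor * n
    return {name: floor + remaining * rate / total for name, rate in rates.items()}


# Hypothetical counts: (actions from shares, shares) per variation.
print(reweight({"default": (10, 100), "spaceship": (30, 100)}))
```

Keeping a floor means a variation that starts slowly still receives some visitors, so its rate can be re-measured as more data comes in.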

In this example, let’s choose optimizing for our allocation, and select percent of shares acted on as the statistic we want to optimize for.

Now that you’ve made your selections, click Save. You’ll be taken to the Test Dashboard.

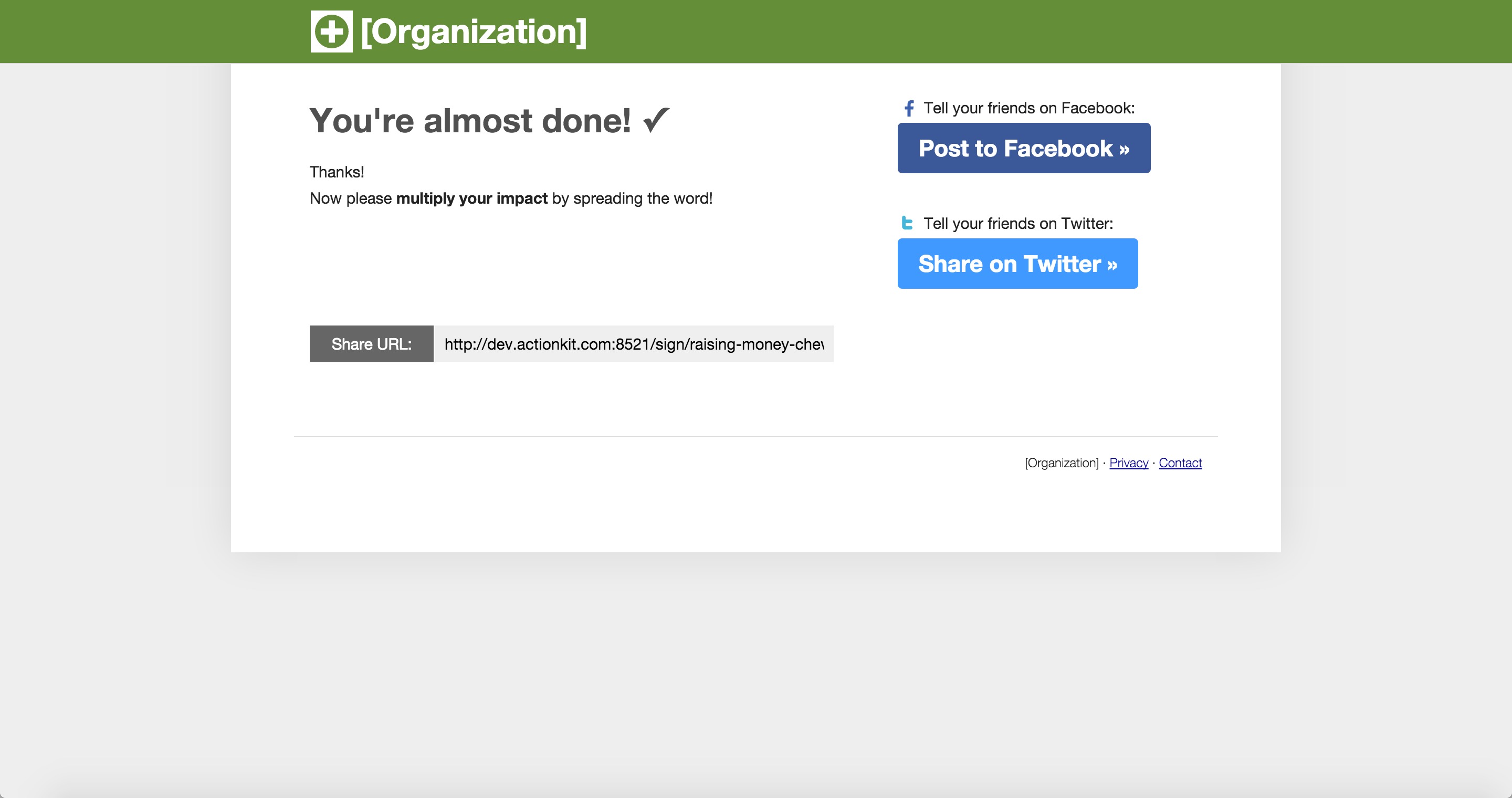

Click on View in order to preview each variation. Submit your signature to view the after-action page, where you’ll land on the variation you’ve chosen to preview.

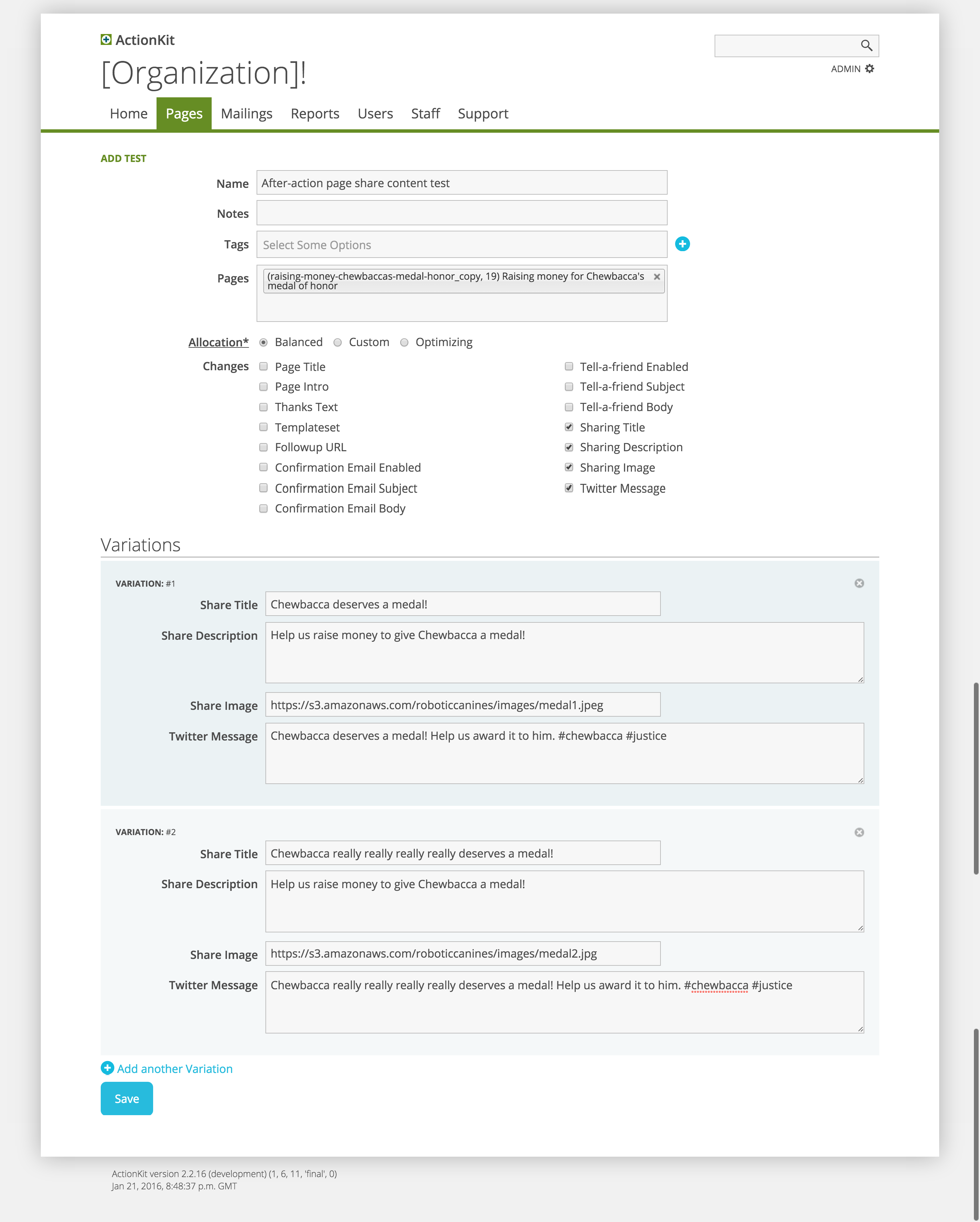

Setting up the second experiment: testing share content

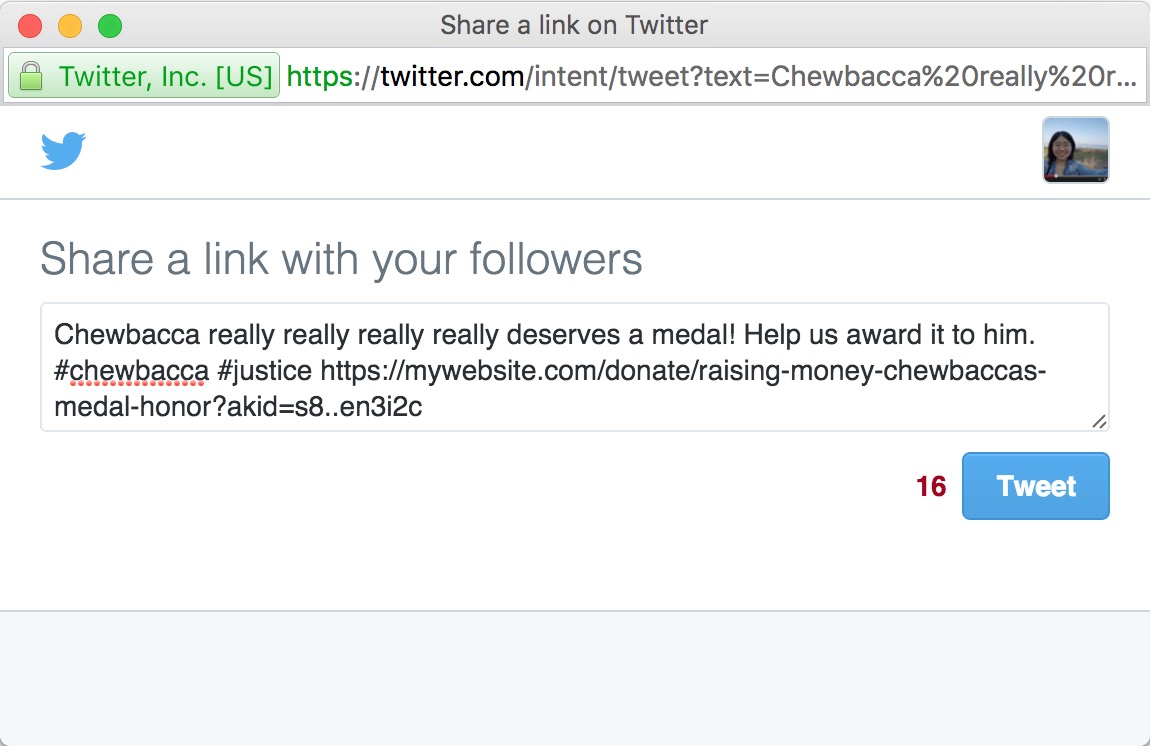

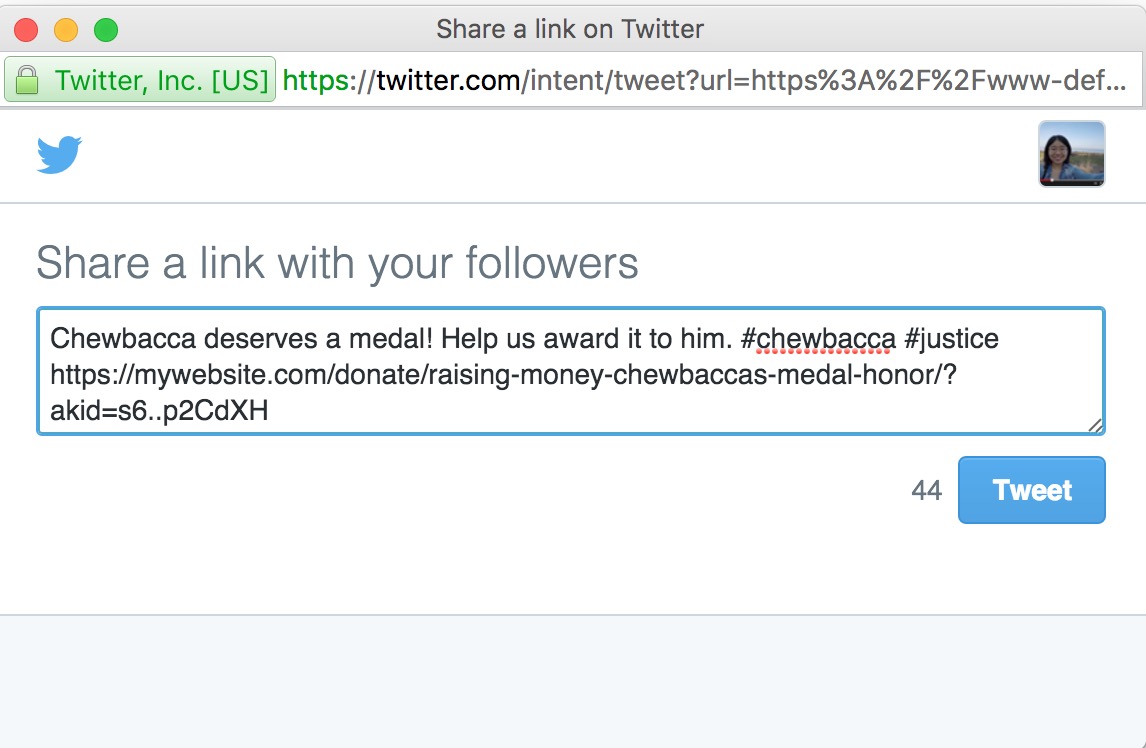

Our second experiment tests different sharing titles, sharing descriptions, images, and Twitter messages on the after-action page.

Below we’ve added only two variations, but you can add as many variations as you’d like.

Once you save this experiment, you can preview its variations.

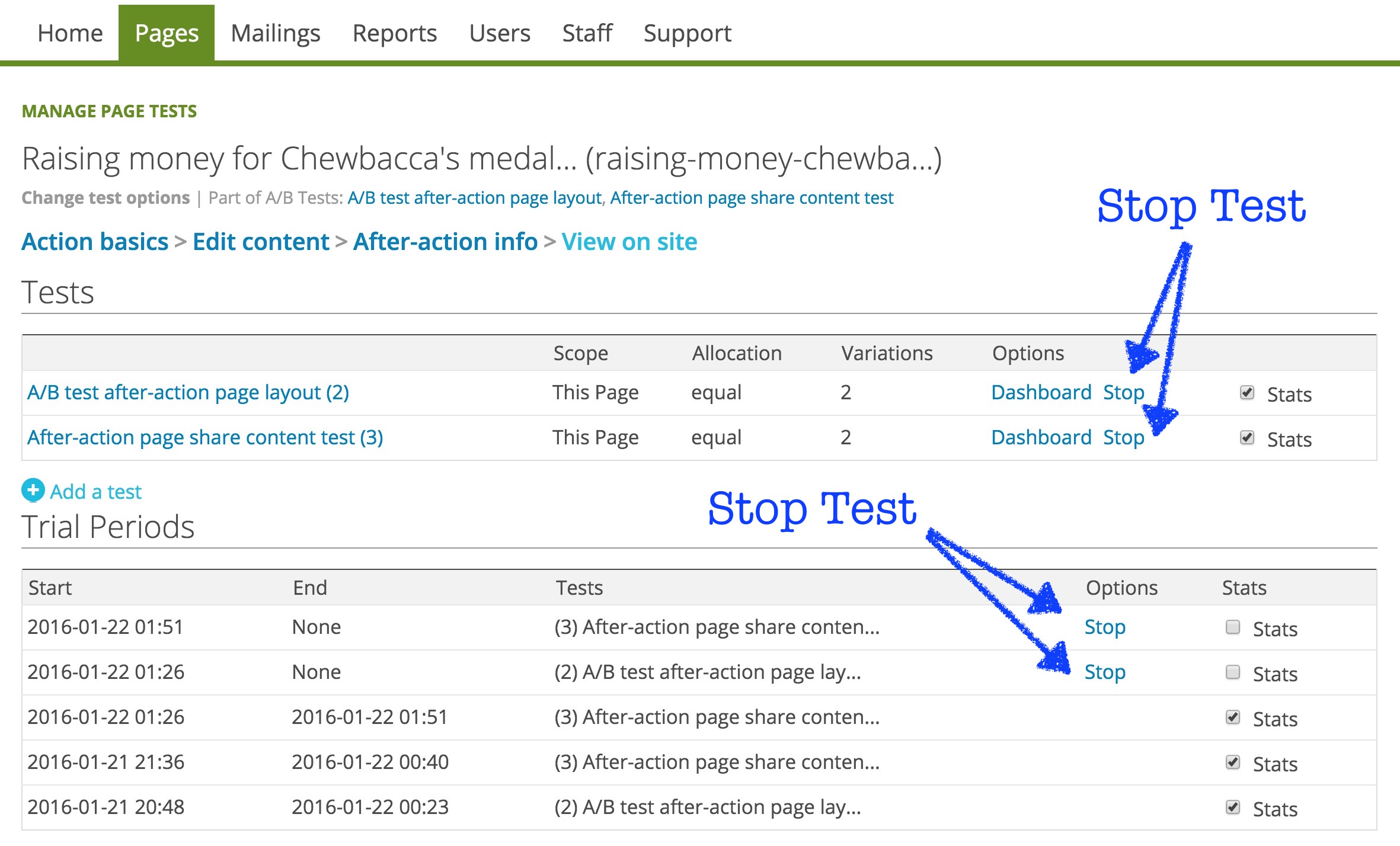

Running multiple tests at the same time

The tests that are currently active will show up in the meta summary below your page title. You can have multiple tests running at the same time.

If you click to stop any of the tests, the links to their individual dashboards will disappear from the meta summary.

Stats

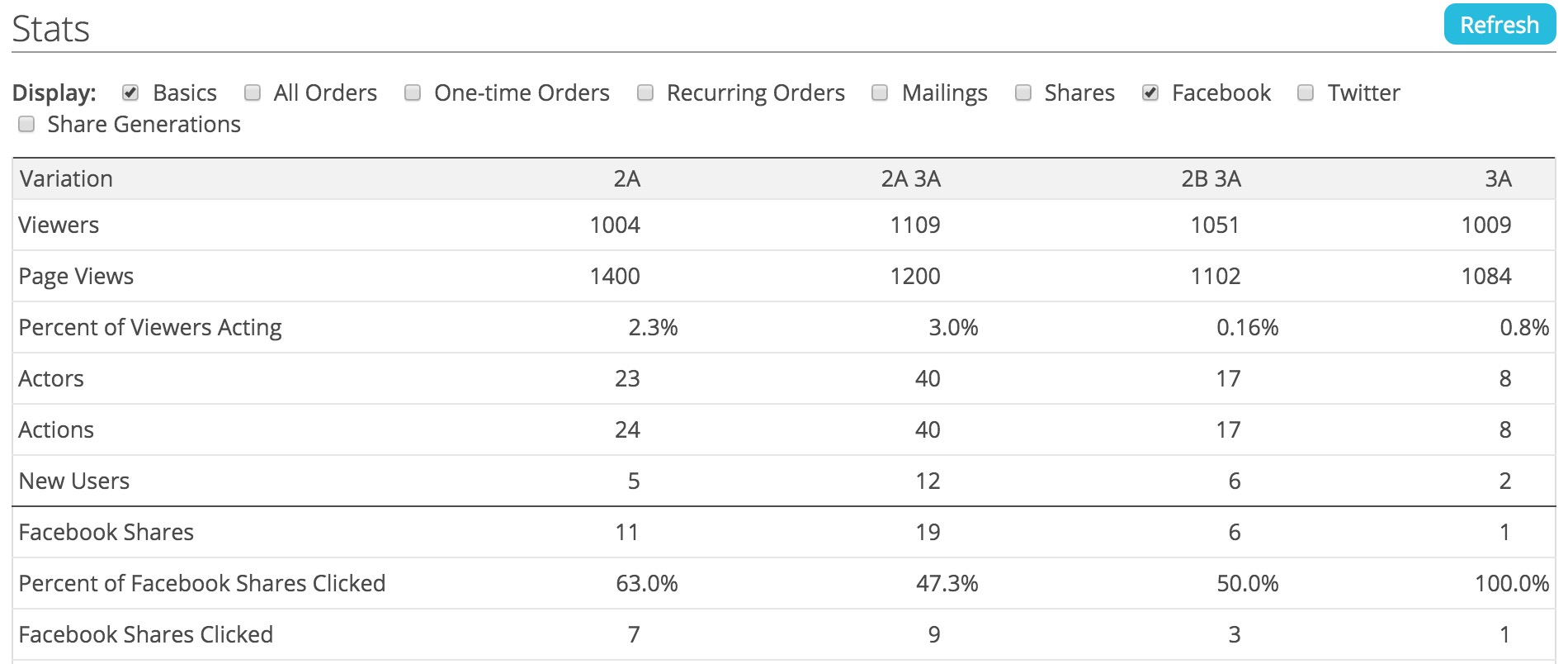

If you’re running two different tests at the same time, the stats dashboard will reflect each of the cross-variations.
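To see what cross-variations means in practice, here is a small Python sketch. The variation names are hypothetical stand-ins for our two experiments; the point is simply that two tests with two variations each produce four combinations to compare.

```python
from itertools import product

# Hypothetical variation names for the two experiments in this tutorial.
layout_variations = ["default layout", "spaceship layout"]
share_variations = ["share content A", "share content B"]

# Every visitor lands in exactly one (layout, share content) combination.
cross_variations = list(product(layout_variations, share_variations))

for layout, share in cross_variations:
    print(f"{layout} + {share}")
```

Each added test multiplies the number of combinations, so each combination sees a smaller slice of your traffic.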

The dashboard gives you some stats you might find particularly helpful:

- the number of new users generated from a particular variation

- the actions from shares

- the percent of shares acted on (the # of actions divided by the # of shares)

- the new users from shares
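As a quick worked example of the percent of shares acted on, here is a minimal Python sketch of the calculation described in the list above. The function name and the counts are made up for illustration.

```python
def percent_of_shares_acted_on(actions_from_shares, shares):
    """Actions from shares divided by shares, expressed as a percentage."""
    if shares == 0:
        # No shares yet, so there is nothing to measure.
        return 0.0
    return 100.0 * actions_from_shares / shares


# Hypothetical counts: 120 shares that led to 30 actions.
print(percent_of_shares_acted_on(30, 120))
```

With these made-up numbers, 30 actions from 120 shares works out to 25 percent.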

Since we’ve chosen an optimizing allocation, ActionKit will automatically route a larger and larger percentage of visitors to the winning variation.

Once the test has reached statistical significance, you can stop it and apply the winning variation.
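The source doesn’t say how ActionKit decides that a result is significant. If you want to sanity-check numbers yourself, one common approach is a two-proportion z-test, sketched below in Python under that assumption. The function name and counts are hypothetical, and the test applies to goals that are true proportions, such as percent of viewers acting.

```python
import math


def two_proportion_p_value(acted_a, viewers_a, acted_b, viewers_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = acted_a / viewers_a
    rate_b = acted_b / viewers_b

    # Pooled rate under the assumption that both variations perform the same.
    pooled = (acted_a + acted_b) / (viewers_a + viewers_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / viewers_a + 1 / viewers_b))
    if std_err == 0:
        # Both rates are 0% or 100%, so there is no difference to detect.
        return 1.0

    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts: viewers who acted out of viewers who saw each variation.
p = two_proportion_p_value(100, 1000, 140, 1000)
print(f"p-value: {p:.4f}")
```

A p-value below a threshold you choose in advance (0.05 is a common convention) suggests the difference is unlikely to be chance alone.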